Publications

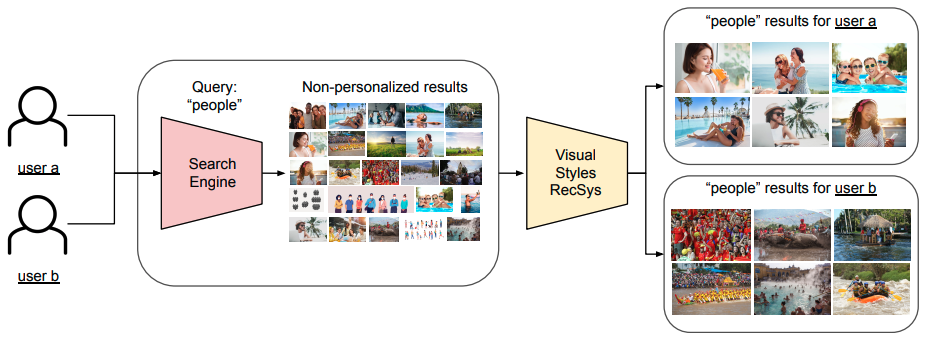

Learning Users’ Preferred Visual Styles in an Image Marketplace

Raul Gomez, Lauren Burnham-King, Alessandra Sala. Shutterstock. ACM RecSys, 2022. [PDF] [Slides] [Poster] [Video]

After my PhD, I joined Shutterstock, where I’ve been working on recommender systems. In this article we present Visual Styles RecSys, a model that learns users’ visual style preferences across the different projects they work on and aims to personalise the content served at Shutterstock. It was presented as an oral in the ACM RecSys ’22 industrial track. A blog post and an extended technical report about this work have also been published.

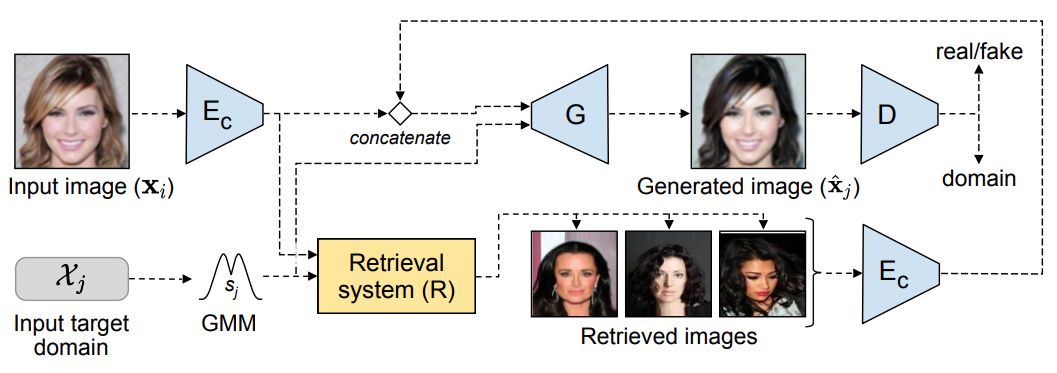

Retrieval Guided Unsupervised Multi-Domain Image to Image Translation

Raul Gomez*, Yahui Liu*, Marco De Nadai, Nicu Sebe, Bruno Lepri, Dimosthenis Karatzas.

ACM MM, 2020. [PDF] [Slides] [Video]

We propose using an image retrieval system to boost the performance of an image-to-image translation system, and we experiment with a dataset of face images.

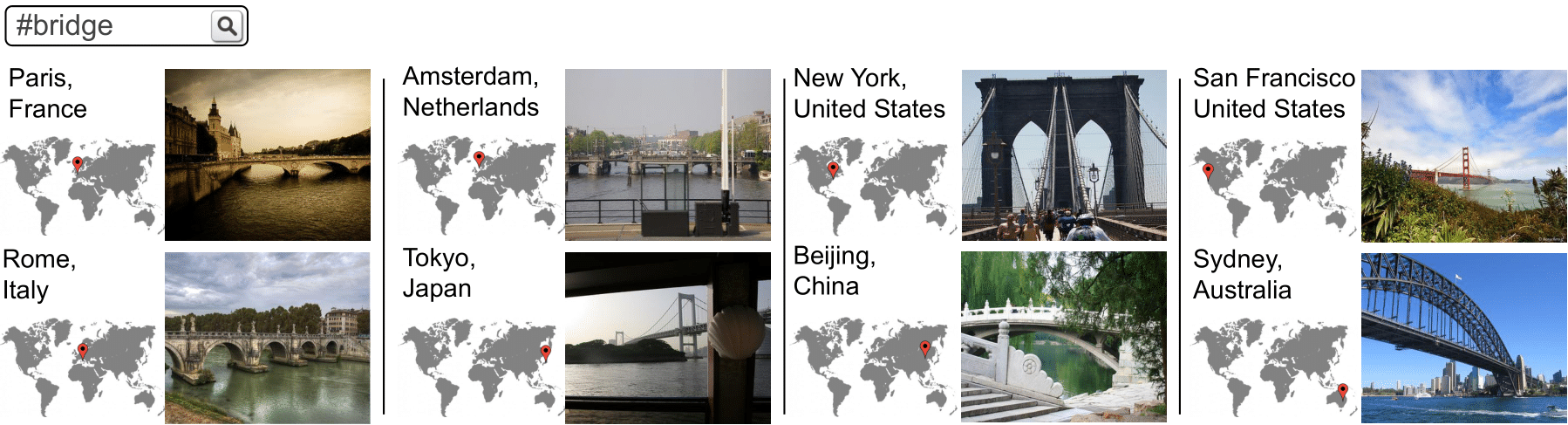

Location Sensitive Image Retrieval and Tagging

Raul Gomez, Jaume Gibert, Lluis Gomez, Dimosthenis Karatzas.

ECCV, 2020. [PDF] [Poster] [Video]

We design a model that retrieves images related to a query hashtag and near a given location, and that tags images by exploiting their location information.

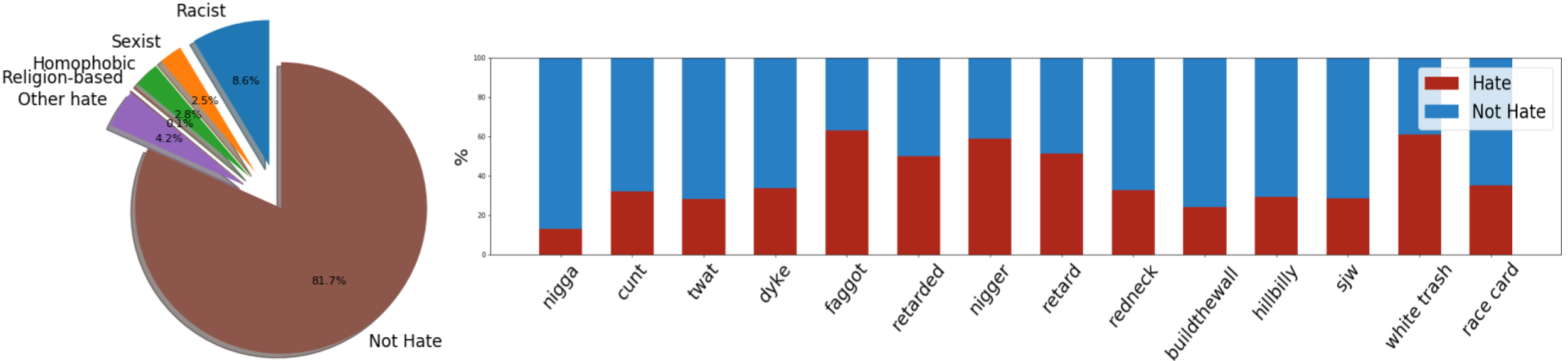

Exploring Hate Speech Detection in Multimodal Publications

Raul Gomez, Jaume Gibert, Lluis Gomez, Dimosthenis Karatzas.

Winter Conference on Applications of Computer Vision, 2020. [PDF] [Slides] [Poster]

We target the problem of hate speech detection in multimodal publications composed of a text and an image. We create MMHS150K, a large-scale dataset from Twitter, and propose different models that jointly analyze textual and visual information for hate speech detection.

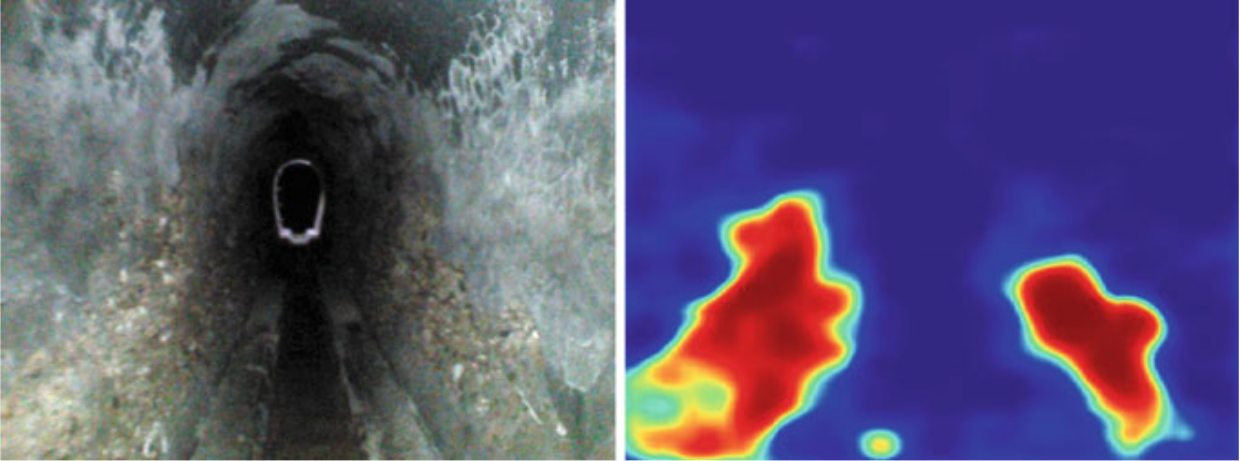

ARSI: An Aerial Robot for Sewer Inspection

François Chataigner, Pedro Cavestany, Marcel Soler, Carlos Rizzo, Jesus-Pablo Gonzalez, Carles Bosch, Jaume Gibert, Antonio Torrente, Raúl Gomez, Daniel Serrano.

Advances in Robotics Research: From Lab to Market, 2019. [PDF]

A robotic system, consisting of a drone with several cameras and sensors, is designed to make the work of sewer inspection brigades safer and more efficient. My contribution to this work is an FCN able to detect sewer defects that manifest as irregular textures on its walls.

Selective Style Transfer for Text

Raul Gomez*, Ali Furkan Biten*, Lluis Gomez, Jaume Gibert, Marçal Rusiñol, Dimosthenis Karatzas.

ICDAR, 2019. [PDF] [Poster]

A selective style transfer model is trained to learn text styles and transfer them to text instances found in images. Experiments in different text domains (scene text, machine-printed text and handwritten text) show the potential of text style transfer in different applications.

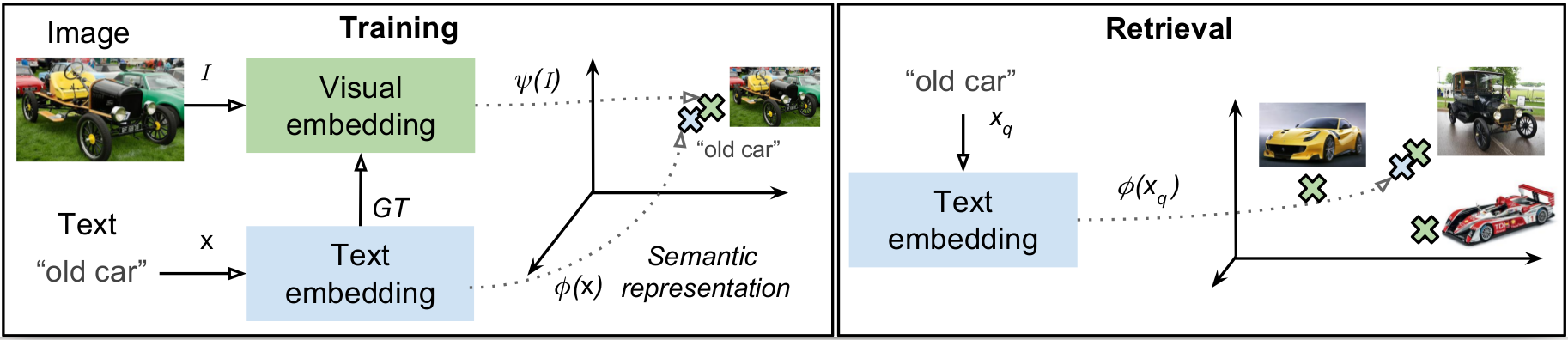

Self-Supervised Learning from Web Data for Multimodal Retrieval

Raul Gomez, Lluis Gomez, Jaume Gibert, Dimosthenis Karatzas.

Book chapter submitted to Multi-Modal Scene Understanding. [PDF]

An extended version of the ECCV workshop article listed below, with extra experiments, qualitative results and a deeper analysis.

Learning to Learn from Web Data through Deep Semantic Embeddings

Raul Gomez, Lluis Gomez, Jaume Gibert, Dimosthenis Karatzas.

ECCV MULA workshop (Oral), 2018. [PDF] [Slides] [Poster]

A performance comparison of different text embeddings for self-supervised learning from images and their associated text, evaluated in a text-based image retrieval setup.

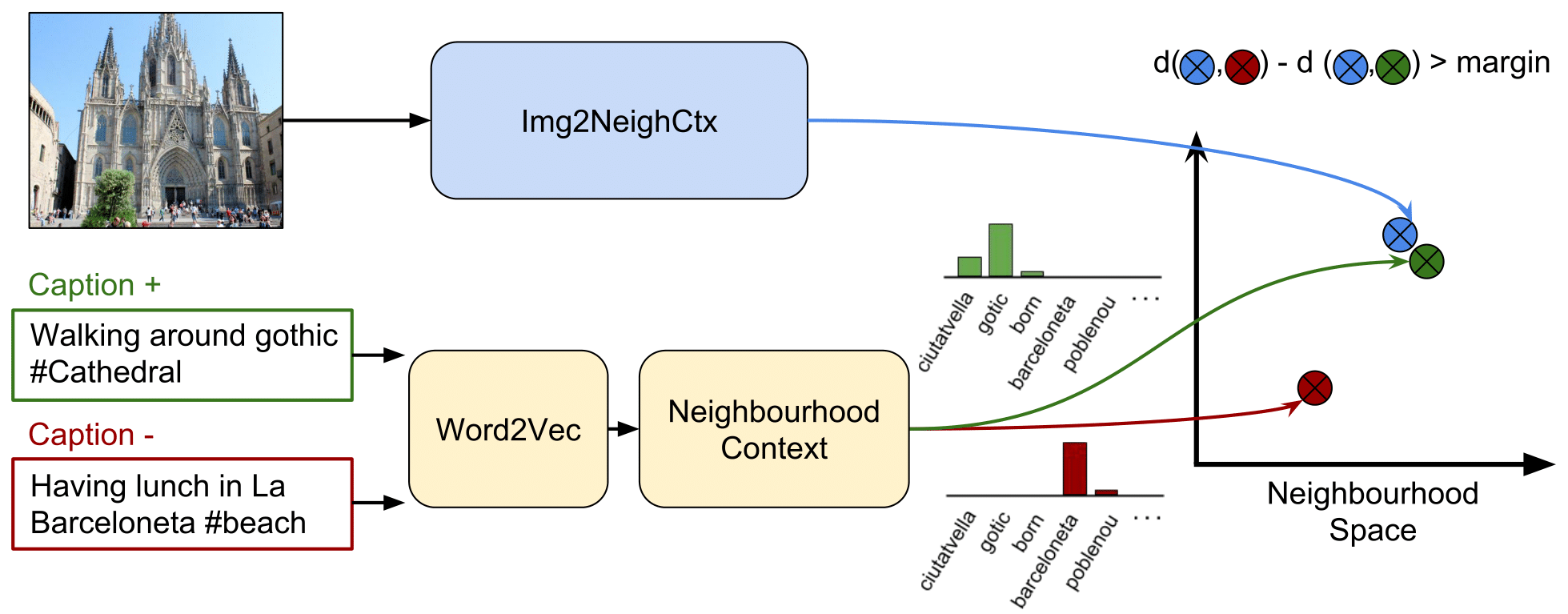

Learning from Barcelona Instagram data what Locals and Tourists post about its Neighbourhoods

Raul Gomez, Lluis Gomez, Jaume Gibert, Dimosthenis Karatzas.

ECCV MULA workshop, 2018. [PDF] [Poster]

We learn relations between text, images and Barcelona neighbourhoods to study how the social media publications of locals and tourists differ and how they relate to the different neighbourhoods.

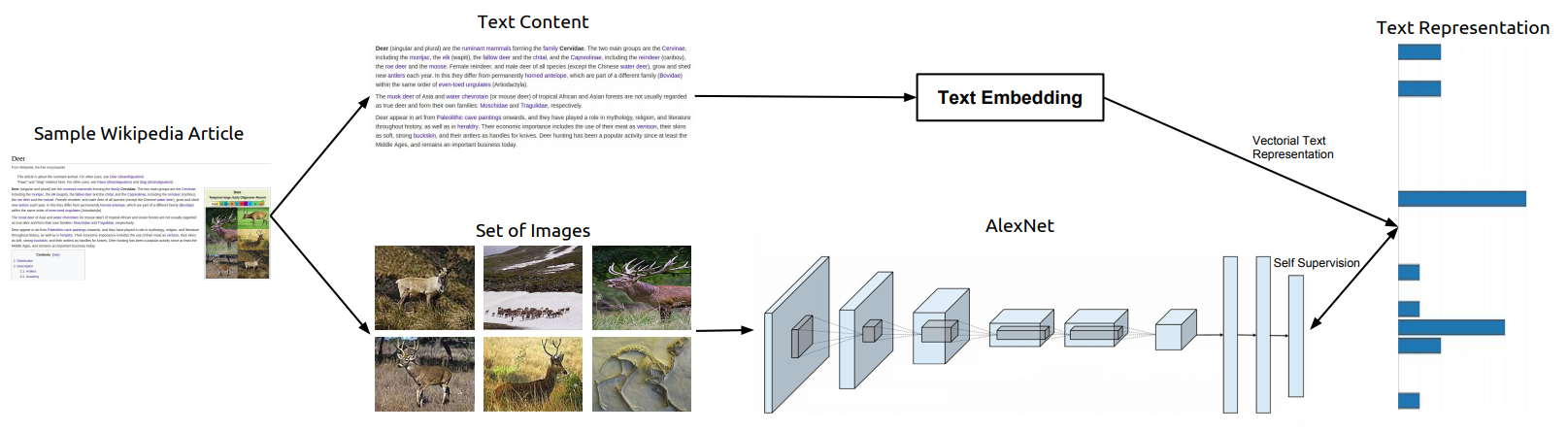

TextTopicNet - Self-Supervised Learning of Visual Features Through Embedding Images on Semantic Text Spaces

Yash Patel, Lluis Gomez, Raul Gomez, Marçal Rusiñol, Dimosthenis Karatzas and CV Jawahar.

arXiv preprint arXiv:1807.02110, 2018. [PDF]

This work proposes a self-supervised method to learn competitive visual features from Wikipedia articles and their associated images.

ICDAR2017 Robust Reading Challenge on COCO-Text

Raul Gomez, Baoguang Shi, Lluis Gomez, Lukas Neumann, Andreas Veit, Jiri Matas, Serge Belongie and Dimosthenis Karatzas.

ICDAR, 2017. [PDF]

I organized the ICDAR 2017 competition on COCO-Text within the Robust Reading Competition framework and wrote this competition report. The tasks were text localization, cropped word recognition and end-to-end recognition. Although the competition is over, the platform is still open for submissions.

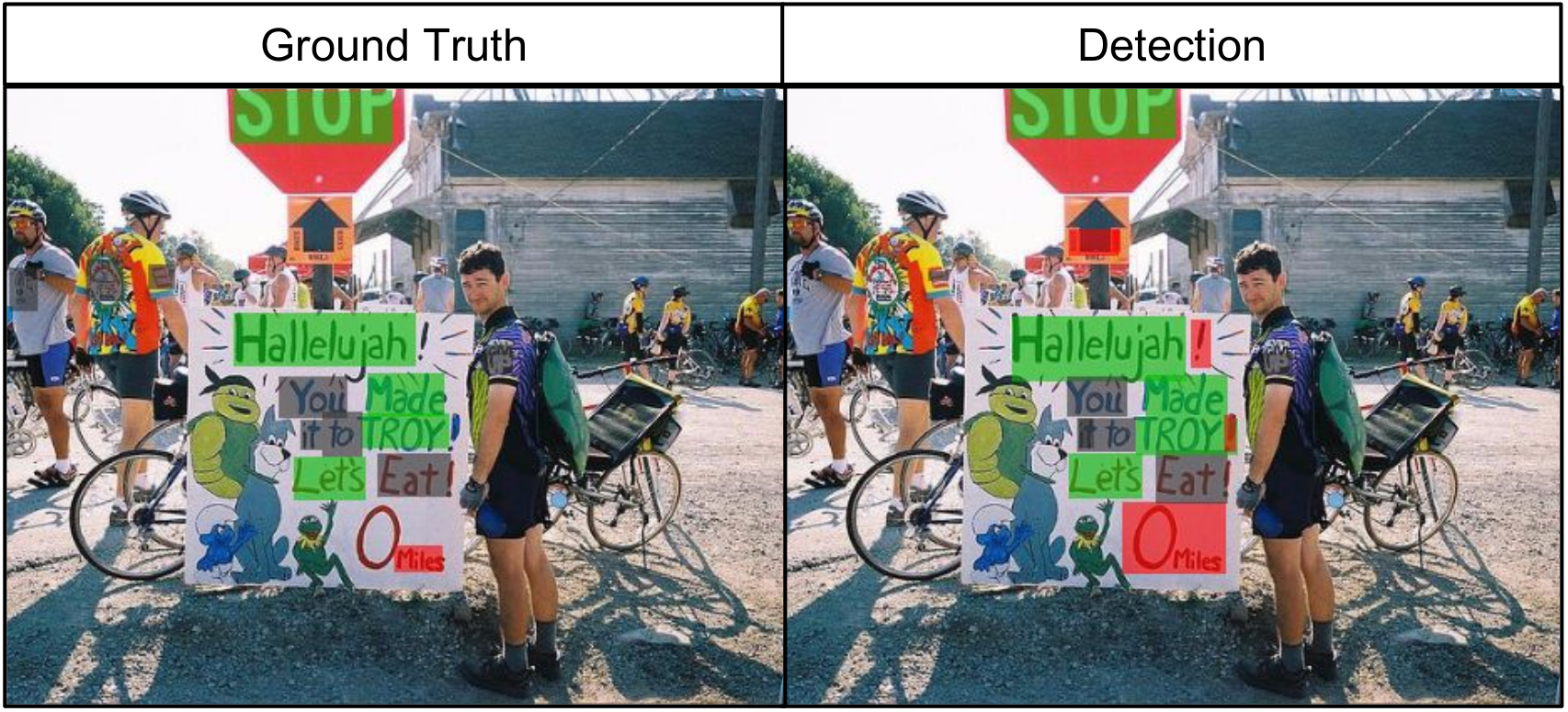

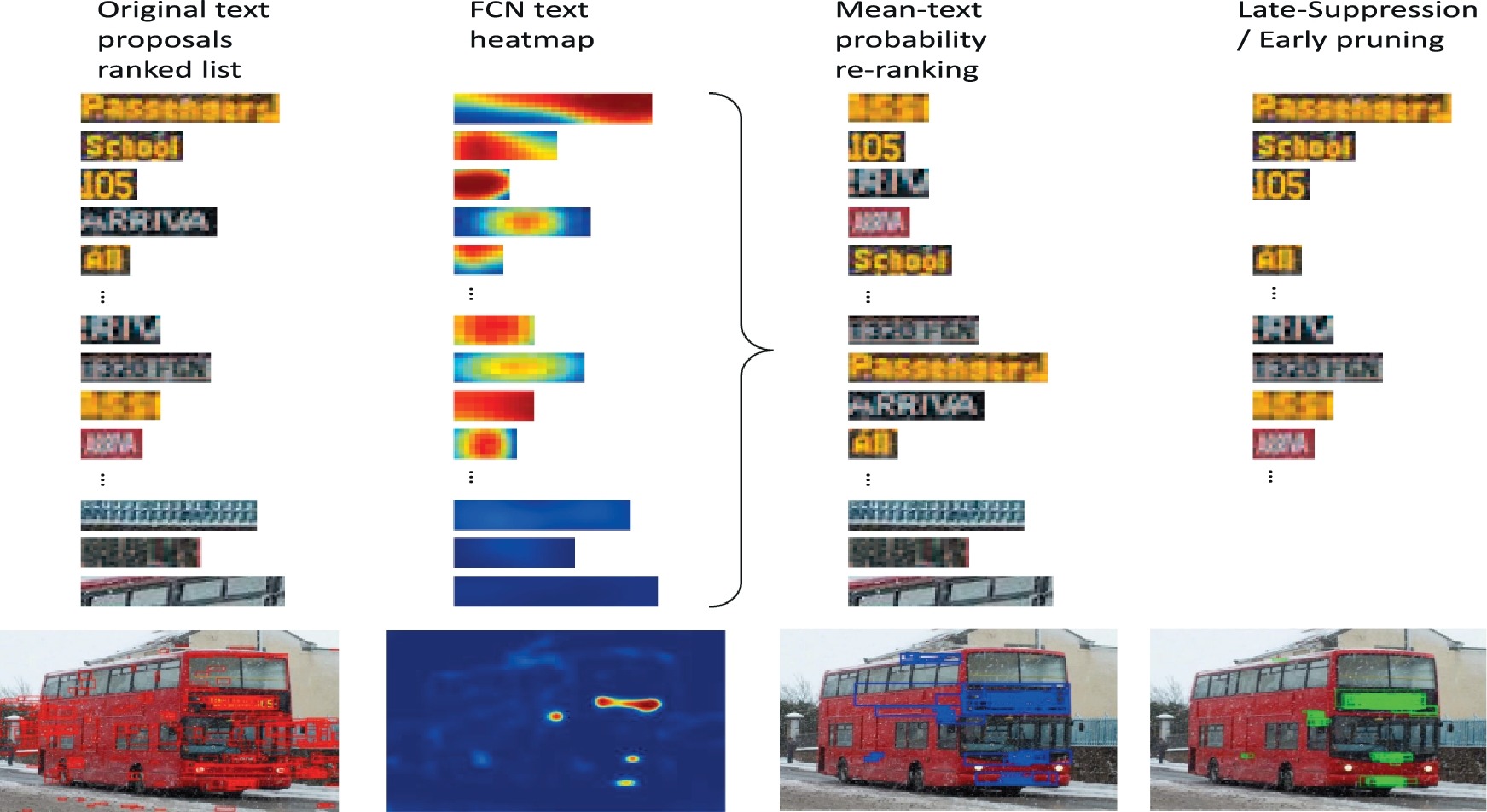

FAST: Facilitated and Accurate Scene Text Proposals through FCN Guided Pruning

Dena Bazazian, Raul Gomez, Anguelos Nicolaou, Lluis Gomez, Dimosthenis Karatzas and Andrew Bagdanov.

Pattern Recognition Letters, 2017. [PDF]

The DLPR workshop publication listed below led to this journal publication. We extended our experiments and improved our algorithm by using the FCN heatmaps to suppress non-textual regions at the beginning of the text proposals stage, achieving a more efficient pipeline.

Improving Text Proposals for Scene Images with Fully Convolutional Networks

Dena Bazazian, Raul Gomez, Anguelos Nicolaou, Lluis Gomez, Dimosthenis Karatzas and Andrew Bagdanov.

ICPR DLPR workshop, 2016. [PDF]

This came out of my master’s thesis. It’s about how to use a text detection FCN to improve the text proposals algorithm (developed by Lluis Gomez i Bigorda, one of my advisors). The code for the FCN model training is here and the code for the text proposals pipeline is here. Watching the FCN detect text in real time is pretty cool.