PhD Thesis Defence

Exploiting the Interplay between Visual and Textual Data for Scene Interpretation

I successfully defended my PhD on 08/10/2020 and obtained the grade of Excellent Cum Laude. This page makes the thesis PDF, the presentation slides, and a video of the presentation available.

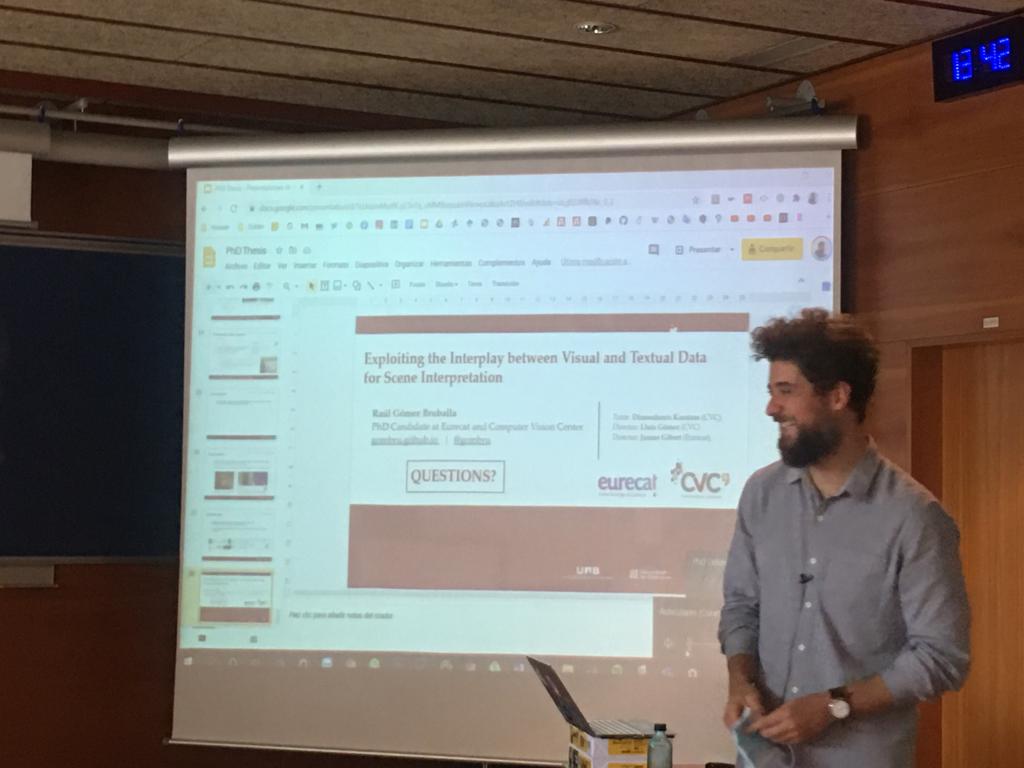

Committee:

Dr. Josep Lladós (Centre de Visió per Computador, Universitat Autònoma de Barcelona)

Dr. Diane Larlus (Naver Labs Europe)

Dr. Francesc Moreno (Universitat Politècnica de Catalunya, Institut de Robòtica i Informàtica Industrial)

Thesis Director:

Dr. Dimosthenis Karatzas (Centre de Visió per Computador, Universitat Autònoma de Barcelona)

Thesis Co-Directors:

Dr. Lluis Gómez (Centre de Visió per Computador, Universitat Autònoma de Barcelona)

Dr. Jaume Gibert (Eurecat)

Abstract:

Machine learning experimentation under controlled scenarios and on standard datasets is necessary to compare the performance of algorithms, since it evaluates all of them in the same setup. However, it is also essential to study how those algorithms perform on unconstrained data and on applied tasks that address real-world problems, in order to ascertain how that research can contribute to our society.

In this dissertation we experiment with the latest computer vision and natural language processing algorithms, applying them to multimodal scene interpretation. In particular, we study how image and text understanding can be jointly exploited to address real-world problems, focusing on learning from Social Media data.

We address several tasks that involve image and textual information, discuss their characteristics, and present the conclusions of our experiments. First, we work on the detection of scene text in images. Then, we work with Social Media posts, exploiting the captions associated with images as supervision to learn visual features, which we apply to multimodal semantic image retrieval. Subsequently, we work with geolocated Social Media images and their associated tags, experimenting with using the tags as supervision, with location-sensitive image retrieval, and with exploiting location information for image tagging. Finally, we address a specific classification problem for Social Media publications consisting of an image and a text: multimodal hate speech classification.